
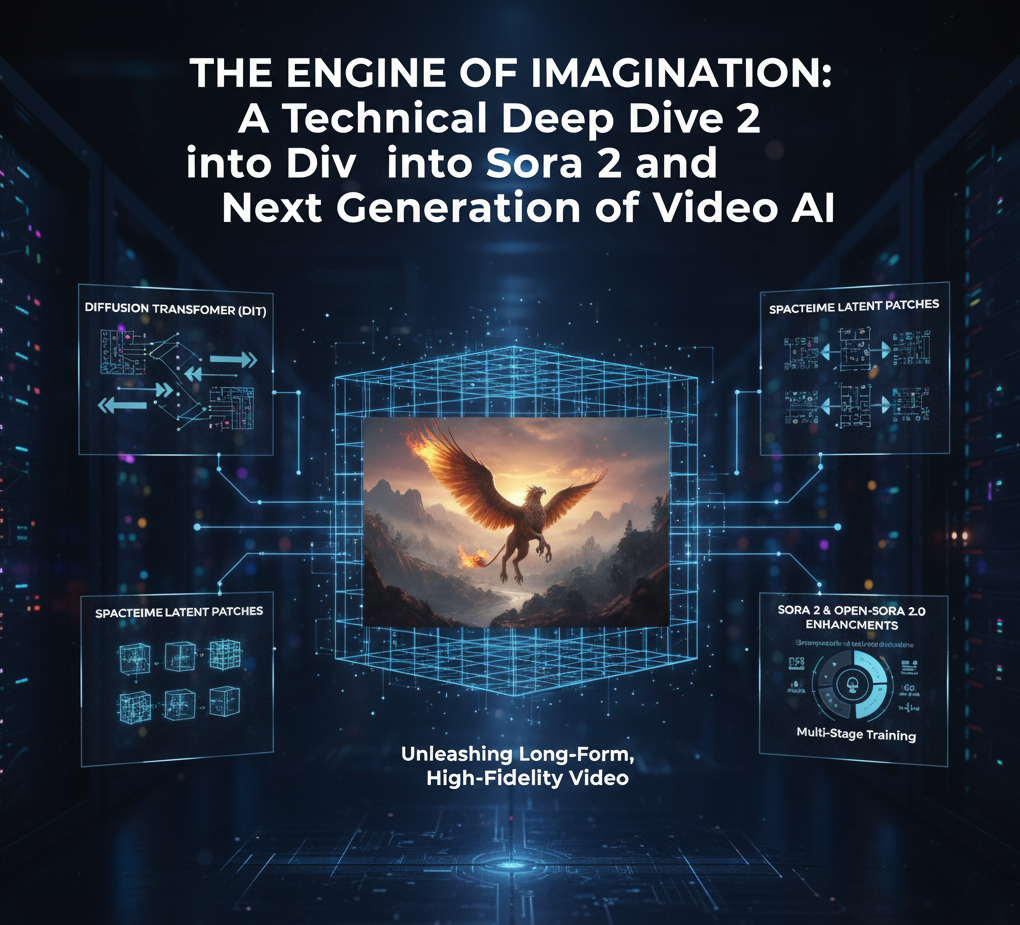

The Engine of Imagination: A Complete Technical Deep Dive into Sora 2.0 and the Future of Video AI


OpenAI’s Sora changed the direction of video generation, and Sora 2.0 pushes that shift further. In parallel, the open-source community released Open-Sora 2.0. Together, these models show how fast text-to-video systems are evolving. They take new approaches to motion, realism, physics, and long-range coherence, and Sora 2.0 adds synchronized audio.


This guide walks through the technical architecture behind Sora 2.0, based on what OpenAI has disclosed about the Sora model family. It also compares Sora 2.0 with Open-Sora 2.0 and highlights the innovations that make this new wave of video models more stable and more controllable than earlier systems.

1. The Core Architecture: Diffusion Transformer (DiT)

Sora 2.0 uses a Diffusion Transformer. This architecture combines two powerful ideas.

Diffusion models provide high fidelity. Transformers provide long-range reasoning and scalability. Together they achieve high-resolution output with smooth motion, consistent objects, and stable scenes.

1.1 How the diffusion process works

The model works backwards from noise.

Here is the process in simple terms.

- It starts from a tensor of random Gaussian noise in latent space, shaped like the compressed video.

- The model predicts what noise should be removed at each step.

- Each step produces a cleaner version of the video latent.

- After many cycles, the final latent is decoded into a complete high-resolution video.

This reverse denoising allows the model to create motion that is detailed and physically believable.
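The steps above can be sketched as a standard DDPM sampling loop. This is a minimal illustration, assuming a linear noise schedule and the classic DDPM update rule; OpenAI has not published the sampler or schedule Sora 2.0 uses. `predict_noise` stands in for the Diffusion Transformer, and `oracle_noise` is a hypothetical helper that "knows" the clean latent, so the loop can be checked end to end without a trained model.

```python
import numpy as np

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear DDPM noise schedule (an assumption; Sora's schedule is not public)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)  # fraction of signal left after t noising steps
    return betas, alphas, alpha_bars

def sample(predict_noise, shape, num_steps=1000, seed=0):
    """DDPM ancestral sampling: start from Gaussian noise and denoise step by step."""
    rng = np.random.default_rng(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    x = rng.standard_normal(shape)  # pure noise in latent space
    for t in reversed(range(num_steps)):
        eps = predict_noise(x, t)  # the model's estimate of the noise in x
        # Subtract the predicted noise to get the mean of the slightly cleaner latent.
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Re-inject a small amount of fresh noise, except on the final step.
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean
    return x  # clean latent; a VAE decoder would map it back to pixels

# Hypothetical stand-in for the Diffusion Transformer: it knows the clean latent,
# so it can return the exact noise present in x at step t.
target = np.full((4, 8, 8, 16), 0.5)  # (latent frames, height, width, channels)
_, _, ALPHA_BARS = make_schedule()

def oracle_noise(x, t):
    return (x - np.sqrt(ALPHA_BARS[t]) * target) / np.sqrt(1.0 - ALPHA_BARS[t])

latent = sample(oracle_noise, target.shape)
print(latent.shape, float(np.abs(latent - target).max()))
```

With a perfect noise predictor the loop lands on the target latent; a real model only approximates `predict_noise`, which is where learned detail and motion come from.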

1.2 Why Sora 2.0 uses a Transformer

Many earlier diffusion-based video generators used convolutional U-Net backbones.

Transformers offer several advantages.

- They scale better with data, size, and compute.

- They allow global attention. This means the model looks at all parts of the video at once.

- They maintain coherence across long videos.

- They unify learning of spatial and temporal dependencies.

The result is smoother motion, less flickering, and more consistent objects across frames.
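To make "global attention" concrete, here is a minimal single-head self-attention sketch in NumPy. Tokens from every frame are flattened into one sequence, so each token receives an attention weight for every other token, including tokens in distant frames. The sizes and random weights are illustrative assumptions; a production DiT uses many heads, many layers, and learned weights.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product attention over ALL tokens at once."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N, N): every token vs every token
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
frames, per_frame, dim = 4, 6, 8
tokens = rng.standard_normal((frames * per_frame, dim))  # flattened spacetime tokens
w_q, w_k, w_v = (rng.standard_normal((dim, dim)) * 0.1 for _ in range(3))
out, weights = self_attention(tokens, w_q, w_k, w_v)

# Total attention that the first token of frame 0 pays to the last frame:
print(weights[0, -per_frame:].sum())
```

Because the attention matrix covers the whole clip, a token in the first frame can directly "see" the last frame, which is what helps keep objects consistent over time.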

2. Unified Data Representation: Spacetime Latent Patches

One of the most important innovations in Sora and Open-Sora is the representation of video as “spacetime patches”.

2.1 The Video Compression Autoencoder (VAE)

Before the Transformer sees any data, a video autoencoder compresses the raw video into a compact latent representation.

It performs two compressions.

- Spatial compression reduces resolution.

- Temporal compression reduces the total number of frames.

This makes training far more efficient while preserving most of the important visual detail.
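The effect of the two compressions can be checked with simple arithmetic. This sketch assumes 4x temporal and 8x spatial downsampling with 16 latent channels, which are common choices in open video autoencoders but are not published figures for Sora 2.0. The function names are hypothetical.

```python
import math

def latent_shape(frames, height, width, t_factor=4, s_factor=8, channels=16):
    """Shape of the latent after temporal and spatial compression (assumed factors)."""
    t = math.ceil(frames / t_factor)  # temporal compression: fewer latent frames
    h = math.ceil(height / s_factor)  # spatial compression: each latent cell
    w = math.ceil(width / s_factor)   # covers s_factor x s_factor pixels
    return (t, h, w, channels)

def compression_ratio(frames, height, width, **kw):
    """How many raw RGB values map to each latent value."""
    raw = frames * height * width * 3
    t, h, w, c = latent_shape(frames, height, width, **kw)
    return raw / (t * h * w * c)

shape = latent_shape(128, 768, 1280)
print(shape)                                         # (32, 96, 160, 16)
print(compression_ratio(128, 768, 1280))             # 48.0
print(latent_shape(128, 768, 1280, s_factor=32))     # (32, 24, 40, 16)
```

A more aggressive spatial factor, as in the last line, shrinks the latent grid 16-fold, which is the kind of saving high-compression autoencoders aim for.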

2.2 Spacetime patches explained

After compression, the latent space is split into small 3D cubes.

- These patches act like tokens in a language model.

- Each patch spans a small region of height, width, and time.

- The model can handle variable aspect ratios, resolutions, and video lengths.

- At inference, the size of the initial noise grid determines the size of the output video.

This removes an old limitation: training videos no longer need to be resized or cropped to a fixed size, and Sora can generate long, widescreen, or vertical videos natively.
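A minimal NumPy sketch of patchification follows. It assumes 1x2x2 patches over a 16-channel latent; these are illustrative values, since the patch sizes used by Sora 2.0 are not public. It shows that the shape of the noise grid, whether landscape, vertical, or square, directly sets how many tokens the Transformer processes, and that the patches can be reassembled losslessly.

```python
import numpy as np

def patchify(latent, pt=1, ph=2, pw=2):
    """Cut a (T, H, W, C) latent into non-overlapping pt x ph x pw cubes, one token each."""
    T, H, W, C = latent.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)      # (nt, nh, nw, pt, ph, pw, C)
    grid = x.shape[:3]                        # patches along time, height, width
    tokens = x.reshape(-1, pt * ph * pw * C)  # flatten each cube into one token
    return tokens, grid

def unpatchify(tokens, grid, pt=1, ph=2, pw=2):
    """Inverse of patchify: reassemble tokens into a (T, H, W, C) latent."""
    nt, nh, nw = grid
    C = tokens.shape[-1] // (pt * ph * pw)
    x = tokens.reshape(nt, nh, nw, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)      # back to (nt, pt, nh, ph, nw, pw, C)
    return x.reshape(nt * pt, nh * ph, nw * pw, C)

# The shape of the initial noise grid decides the shape of the output video.
rng = np.random.default_rng(0)
grids = {"landscape": (8, 48, 80), "vertical": (8, 80, 48), "square": (16, 64, 64)}
results = {}
for name, (T, H, W) in grids.items():
    noise = rng.standard_normal((T, H, W, 16))  # 16 latent channels (assumed)
    tokens, grid = patchify(noise)
    results[name] = (grid, tokens.shape)
    print(name, grid, tokens.shape)
```

Landscape and vertical grids yield the same number of tokens in a different arrangement, so one model can serve both formats without cropping.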

3. Sora 2.0 vs Open-Sora 2.0: Architectural Enhancements

Both models improve realism, control, and efficiency but follow different design routes.

Here is a detailed comparison.

Comparison Table

| Feature | Sora 2.0 (OpenAI) | Open-Sora 2.0 (Open Source) |
|---|---|---|
| Realism | Stronger physics and sharper details | Refined high-compression video autoencoder; builds on strong image models such as FLUX |
| Audio | Adds synchronized audio | Planned for future updates |
| Consistency | Strong temporal coherence and editing controls | Hybrid Transformer (MMDiT-inspired) improves cross-modal representation |
| Training | Recaptioning technique from DALL·E 3 | Low-resolution T2V first, then high-resolution fine-tuning |
| Data filtering | Curated internal datasets | Hierarchical filtering for open-source video |
| Cost | Massive internal compute | Achieved near-commercial quality for about $200k |

Both show that video generation is moving toward generalist models that understand physics, motion, and camera behavior.

4. Technical Innovations in Detail

4.1 Realism and physics

Sora 2.0 learns physical regularities from video data rather than running an explicit physics engine.

Examples include:

- believable gravity

- realistic collisions

- accurate shadows

- fluid movement

- correct object deformation

Open-Sora 2.0 pursues similar realism by refining its video autoencoder and building on powerful open image models such as FLUX.

4.2 Audio generation

Sora 2.0 generates audio together with the video.

This includes ambient sound, sound effects tied to movement, and speech.

Audio is synchronized with on-screen action to create a more cinematic experience.

4.3 Control and editing

Sora 2.0 offers several control mechanisms.

- Object replacement

- Cinematic editing controls

- Draft previews for faster iteration

- Better adherence to prompts

- Fewer distortions in long scenes

4.4 Hybrid Transformer structure in Open-Sora

The open-source version uses a hybrid approach with:

- dual-stream blocks for separate processing of text and video

- single-stream blocks for cross-modal fusion

- 3D rotary position embeddings (RoPE), which encode each token's position in time, height, and width for smoother motion understanding

This improves stability while keeping training lightweight.
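The difference between the two block types can be sketched in NumPy. This is a simplified illustration in the spirit of MMDiT and FLUX-style blocks, not Open-Sora's code: it uses a single attention head, random weights, and hypothetical function names, and it omits layer norms, MLPs, timestep modulation, and the 3D rotary embeddings.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 16  # hidden size (illustrative)

def softmax(x):
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def attention(q, k, v):
    return softmax(q @ k.T / np.sqrt(q.shape[-1])) @ v

def init_qkv():
    """Random query/key/value projections (stand-ins for learned weights)."""
    return [rng.standard_normal((D, D)) * 0.1 for _ in range(3)]

def dual_stream_block(text, video, text_w, video_w):
    """Separate weights per modality, but one joint attention over both sequences."""
    tq, tk, tv = (text @ w for w in text_w)
    vq, vk, vv = (video @ w for w in video_w)
    q = np.concatenate([tq, vq])
    k = np.concatenate([tk, vk])
    v = np.concatenate([tv, vv])
    out = attention(q, k, v)
    n = len(text)
    return text + out[:n], video + out[n:]  # residual; the two streams stay separate

def single_stream_block(text, video, shared_w):
    """One set of weights applied to the concatenated text + video sequence."""
    x = np.concatenate([text, video])
    q, k, v = (x @ w for w in shared_w)
    x = x + attention(q, k, v)
    n = len(text)
    return x[:n], x[n:]

text = rng.standard_normal((5, D))    # 5 text tokens
video = rng.standard_normal((24, D))  # 24 spacetime-patch tokens
text, video = dual_stream_block(text, video, init_qkv(), init_qkv())
text, video = single_stream_block(text, video, init_qkv())
print(text.shape, video.shape)
```

Dual-stream blocks let text and video keep modality-specific weights while still exchanging information through joint attention; single-stream blocks then share weights across the fused sequence, which uses fewer parameters per block.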

4.5 Multi-stage training strategy

Open-Sora 2.0 uses a multi-stage training method that follows a coarse-to-fine pattern.

- Train on low-resolution video to learn motion.

- Fine-tune at high resolution to add detail.

This saves compute while still producing strong results.
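Why the low-resolution stage is cheaper follows from token counts. The sketch below assumes 4x temporal and 8x spatial compression with 2x2 patches and compares 256x256 and 768x768 clips of the same length; these settings are illustrative, not Open-Sora's published recipe, and `num_tokens` is a hypothetical helper.

```python
def num_tokens(frames, height, width, t_factor=4, s_factor=8, patch=2):
    """Tokens seen by the Transformer under assumed VAE factors and 2x2 patches."""
    t = frames // t_factor
    h = height // (s_factor * patch)
    w = width // (s_factor * patch)
    return t * h * w

low = num_tokens(128, 256, 256)
high = num_tokens(128, 768, 768)
print(low, high)          # 8192 73728
print(high / low)         # 9.0  (per-token layers scale linearly with tokens)
print((high / low) ** 2)  # 81.0 (attention scales with tokens squared)
```

Tripling the side length multiplies the token count by 9 and the attention cost by roughly 81, so learning motion at low resolution first avoids paying that price for most of training.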

5. Key Takeaways and Future Implications

The innovations in Sora 2.0 and Open-Sora show where video AI is heading.

- Model scaling is central. Larger DiT models produce higher quality.

- Spacetime patches let a single model learn from both videos and images, since an image is simply a one-frame video.

- Cost-efficient training methods allow open-source competitors to catch up.

- Real-world physics simulation is becoming a standard requirement.

- Long videos with strong temporal consistency are now reachable.

Sora 2.0’s design shows a future where video generation becomes a core tool for film, advertising, gaming, and simulation.

People Also Ask: Key Questions Answered

How is Sora 2.0 different from earlier video generators?

Sora 2.0 uses a Diffusion Transformer whose global attention over spacetime patches improves motion stability. This creates smoother videos with fewer artifacts and more consistent details across long sequences.

Why are spacetime patches important?

They allow the model to treat video as a sequence of 3D tokens. This solves issues with aspect ratios, duration limits, and resizing. It makes training more flexible and improves scene coherence.

Does Sora 2.0 require large compute resources?

Yes. Sora 2.0 relies on extensive internal compute. Open-Sora 2.0 shows that competitive results are possible at much lower cost by using staged training and open-source image backbones.

How accurate is Sora 2.0’s physics simulation?

The model learns physical patterns directly from large-scale video data. It handles collisions, fluids, shadows, and motion more convincingly than earlier systems, though it still makes visible mistakes.

Can Sora 2.0 generate different aspect ratios or long videos?

Yes. The noise grid defines the output size. This allows the model to produce vertical videos, square formats, or long cinematic sequences without retraining.

Frequently Asked Questions

What is Sora 2.0?

It is OpenAI’s second-generation video generation model. It uses a Diffusion Transformer to generate realistic videos with synchronized audio, strong physics, and motion coherence.

How does Sora 2.0 generate video?

It starts with latent noise. It removes noise step by step through a reverse diffusion process. This produces a clean video latent that is decoded into pixel space.

What makes Sora 2.0 better than Sora 1.0?

It has improved physics, synchronized audio, better editing controls, and more stable long videos.

Is Open-Sora 2.0 as powerful as Sora 2.0?

It narrows the gap with commercial models on public benchmarks, but it relies on a more cost-efficient training approach. It is open source and easier to experiment with.

Can Sora 2.0 be used for film or commercial projects?

Yes. The model aims for high realism and consistent motion, making it suitable for creative production and commercial use cases.
