P-Computers: The Revolutionary Probabilistic Computing Architecture Challenging Quantum Supremacy

1. Introduction

P-computers represent a new class of computing machines built around probabilistic behavior instead of strict determinism. Rather than forcing bits to be either 0 or 1 at all times, these systems harness controlled randomness to explore many possible solutions in parallel.

Recent work from researchers at UC Santa Barbara has shown that carefully designed p-computers can outperform state-of-the-art quantum annealers on challenging 3D spin-glass optimization problems. Even more strikingly, they do this while running at room temperature on technology that can be manufactured with today’s semiconductor processes.

This turns p-computers into a serious classical competitor in a space that many assumed would be dominated only by quantum hardware.

2. What Are P-Computers and P-Bits

2.1 From Bits and Qubits to P-Bits

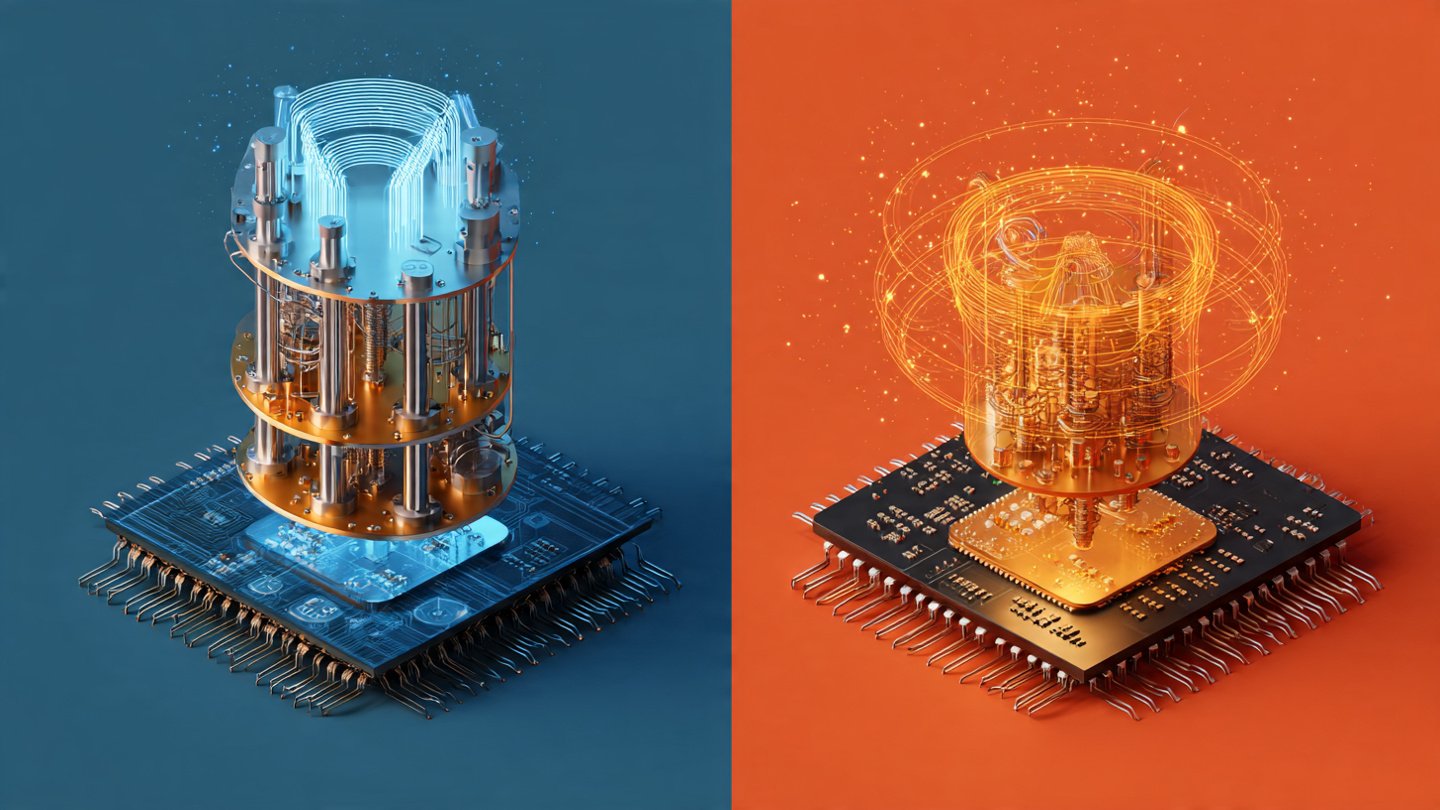

Traditional digital computers work with bits that are always 0 or 1. Quantum computers use qubits that exist in superpositions of states but require extremely low temperatures and delicate isolation to operate.

P-computers introduce a third kind of primitive: the probabilistic bit, or p-bit.

A p-bit:

- Fluctuates randomly between 0 and 1.

- Has a tunable probability of being 1 at any instant.

- Interacts with other p-bits through weighted connections.

Instead of returning a single exact answer, a network of p-bits naturally samples many candidate solutions and tends to spend more time in low-energy, high-quality states. This makes p-computers powerful for optimization, inference and decision making under uncertainty.
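To make "a tunable probability of being 1" concrete, here is a minimal software model of one p-bit. It assumes the bipolar rule commonly used in the p-bit literature, m = sgn(tanh(I) − r) with r drawn uniformly from (−1, 1), so that P(m = +1) = (1 + tanh I) / 2; the input I plays the role of the bias. The function name `p_bit` is hypothetical.

```python
import numpy as np

# Hypothetical helper; a sketch of the usual p-bit rule, not a device model.
def p_bit(I, rng, size=None):
    """Return +1 or -1 with P(+1) = (1 + tanh(I)) / 2.

    Compares tanh(I) against a uniform random number r in (-1, 1),
    i.e. m = sgn(tanh(I) - r).
    """
    r = rng.uniform(-1.0, 1.0, size=size)
    return np.where(np.tanh(I) > r, 1, -1)

rng = np.random.default_rng(0)
for I in (-2.0, 0.0, 0.5, 2.0):
    samples = p_bit(I, rng, size=100_000)
    # Measured frequency of +1 should track the target probability closely.
    print(f"I={I:+.1f}  measured P(1)={np.mean(samples == 1):.3f}  "
          f"target={(1 + np.tanh(I)) / 2:.3f}")
```

At I = 0 the p-bit is a fair coin; large positive or negative inputs pin it near 1 or 0 while still allowing occasional flips.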

2.2 The Coupled Network View

Mathematically, each p-bit is updated according to a rule that depends on the weighted sum of signals from its neighbors.

- The weights are often written as $J_{ij}$, similar to the couplings in Ising models.

- The system behaves like a physical energy landscape: states with lower energy are more probable.

- Over time the network explores that landscape and concentrates around optimal or near optimal configurations.
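One standard way to write this rule is shown below. It is widely used in the p-bit literature but is stated here as an assumption, since the text above does not spell it out. It takes bipolar states $m_i \in \{-1, +1\}$, symmetric weights $J_{ij}$ with $J_{ii} = 0$, a bias $h_i$ for each p-bit, an inverse temperature $\beta$, and a fresh uniform random number $r$ for every update:

```latex
I_i = \beta\Big(\sum_{j} J_{ij}\, m_j + h_i\Big), \qquad
m_i = \operatorname{sgn}\big(\tanh I_i - r\big), \quad r \sim \mathcal{U}(-1, 1)

E(\mathbf{m}) = -\sum_{i<j} J_{ij}\, m_i m_j \;-\; \sum_i h_i\, m_i, \qquad
P(\mathbf{m}) \propto e^{-\beta E(\mathbf{m})}
```

Updating p-bits one at a time with the first line is Gibbs sampling of the distribution on the second line, because $P(m_i = +1 \mid \text{rest}) = (1 + \tanh I_i)/2$. Lowering $E$ therefore raises a state's probability, which is the energy-landscape picture described above.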

Instead of writing a custom solver for every optimization problem, engineers map the problem onto a p-bit network and let the hardware do the heavy lifting through its natural dynamics.
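One common route to that mapping starts from a QUBO (quadratic unconstrained binary optimization) over 0/1 variables and converts it to Ising weights. The sketch assumes the Ising energy E(m) = −Σ_{i<j} J_ij m_i m_j − Σ_i h_i m_i and the substitution x_i = (1 + m_i) / 2. All function names are hypothetical, and the brute-force check is only practical for small problems.

```python
import itertools
import numpy as np

# Hypothetical helpers: QUBO -> Ising conversion plus a brute-force sanity check.
def qubo_to_ising(Q):
    """Map min_x sum_{i<=j} Q_ij x_i x_j (x_i in {0, 1}) to Ising (J, h, offset).

    Guarantees f(x) = E(m) + offset for m = 2x - 1, where
    E(m) = -sum_{i<j} J_ij m_i m_j - sum_i h_i m_i.
    """
    Q = np.triu(np.asarray(Q, dtype=float))  # only the upper triangle is used
    d = np.diag(Q)
    U = Q - np.diag(d)                       # strictly upper-triangular pair terms
    J = -(U + U.T) / 4.0                     # symmetric, zero diagonal
    h = -(d / 2.0 + (U.sum(axis=1) + U.sum(axis=0)) / 4.0)
    offset = d.sum() / 2.0 + U.sum() / 4.0
    return J, h, offset

def qubo_value(x, Q):
    return x @ np.triu(np.asarray(Q, dtype=float)) @ x  # x_i**2 == x_i for binary x

def ising_energy(m, J, h):
    return -0.5 * m @ J @ m - h @ m          # 0.5 because J holds each pair twice

def mapping_is_exact(Q):
    """Check that both forms agree on every binary assignment."""
    J, h, offset = qubo_to_ising(Q)
    for bits in itertools.product([0.0, 1.0], repeat=len(Q)):
        x = np.array(bits)
        if not np.isclose(qubo_value(x, Q), ising_energy(2 * x - 1, J, h) + offset):
            return False
    return True

Q = np.array([[-1.0, 2.0, 0.0],
              [0.0, -1.0, 2.0],
              [0.0, 0.0, -1.0]])
print(mapping_is_exact(Q))  # True
```

Once a problem is in (J, h) form, the same p-bit update rule applies regardless of where the problem came from.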

3. Hardware Implementations of P-Computers

3.1 MRAM Based P-Bits

The first experimental p-bits were demonstrated by slightly modifying MRAM devices.

By exploiting voltage-controlled magnetism, researchers created nanodevices that switch randomly between two resistive states with a controllable bias. These devices serve as physical p-bits and showed impressive hardware-level gains:

- Around 10× lower energy consumption compared with equivalent CMOS-only implementations.

- Around 100× smaller area for the same logical functionality.

- Reliable probabilistic switching at room temperature.

MRAM-based p-bits show that true probabilistic hardware can be built from emerging memory technologies without exotic fabrication steps.

3.2 FPGA Based P-Computer Accelerators

For larger prototypes and rapid experimentation, many groups use FPGAs to emulate p-bit networks.

One key example is the pc-COP system, a 2,048-p-bit probabilistic hardware accelerator targeted at combinatorial optimization problems. In benchmarks it demonstrated:

- 5–18× faster sampling than highly optimized classical software algorithms.

- Up to six orders of magnitude total performance improvement in some scenarios when considering throughput and energy.

- Full reconfigurability: the same board can be retargeted to different optimization tasks.

These FPGA-based p-computers act as a bridge between pure simulation and future custom ASIC p-computer chips.

3.3 Synchronous vs Asynchronous Architectures

There are two main ways to update p-bits in hardware:

- Asynchronous architectures: each p-bit updates independently at its own pace. This is closer to how physical systems behave and is simple to reason about.

- Synchronous architectures: many or all p-bits are updated in parallel on a global clock, like “dancers moving in lockstep.” This can be more convenient for digital design and pipelining.

Studies show that both approaches can reach similar solution quality, with synchronous designs often winning on implementation efficiency and throughput.
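Synchronous designs have to respect one constraint. If two directly coupled p-bits update in the same tick, each reads a stale value of the other, and this can bias the sampling. A common remedy is to color the connection graph so that no two neighbors share a color, then update one color class per tick. The sketch below assumes a greedy coloring and, for readability, a dense weight matrix on a small periodic square lattice with random ±1 couplings. All names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: graph-colored ("chromatic") parallel p-bit updates.
def greedy_coloring(neighbors):
    """Give each node the smallest color not used by an already-colored neighbor."""
    colors = np.full(len(neighbors), -1)
    for node, nbrs in enumerate(neighbors):
        used = {colors[nb] for nb in nbrs if colors[nb] >= 0}
        c = 0
        while c in used:
            c += 1
        colors[node] = c
    return colors

def chromatic_sweep(m, J, h, colors, beta, rng):
    """Update every p-bit of one color in parallel, then move on to the next color."""
    for c in range(colors.max() + 1):
        idx = np.flatnonzero(colors == c)
        # Same-color p-bits are uncoupled, so their inputs only read other colors.
        I = beta * (J[idx] @ m + h[idx])
        r = rng.uniform(-1.0, 1.0, size=idx.size)
        m[idx] = np.where(np.tanh(I) > r, 1.0, -1.0)
    return m

# Example: L x L periodic square lattice, node index = x * L + y.
L = 6
n = L * L
neighbors = [[((x + dx) % L) * L + (y + dy) % L
              for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1))]
             for x in range(L) for y in range(L)]
rng = np.random.default_rng(0)
J = np.zeros((n, n))
for i, nbrs in enumerate(neighbors):
    for j in nbrs:
        if i < j:
            J[i, j] = J[j, i] = rng.choice([-1.0, 1.0])
h = np.zeros(n)
colors = greedy_coloring(neighbors)
m = rng.choice([-1.0, 1.0], size=n)
for _ in range(100):
    chromatic_sweep(m, J, h, colors, beta=1.0, rng=rng)
print("colors used:", colors.max() + 1)
```

Within a color class the updates are independent, so they produce the same result as updating those p-bits one after another. This is what lets a clocked design keep the sampling behavior of a sequential update.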

4. Sparse Ising Machines: The Core Innovation

One of the breakthrough ideas in modern p-computer design is the sparse Ising machine, or sIM.

In a naïve, fully connected network, every p-bit talks to every other p-bit. That becomes expensive and slow as you scale to thousands or millions of nodes. Sparse Ising machines take a different approach:

- Each p-bit is only connected to a small set of neighbors.

- You can think of each p-bit as a person who relies on a few trusted friends instead of listening to everyone at once.

- This limited but carefully designed connectivity still captures the structure of the optimization problem.

The key metric here is flips per second: how many p-bit state updates the system can perform each second. Because each p-bit sums inputs from only a few neighbors, and p-bits that share no connection can update at the same time, this rate scales almost linearly with the number of p-bits, which unlocks massive parallelism on large chips.
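A minimal sketch of what sparse connectivity means in practice, assuming a periodic 3D cubic lattice with random ±1 couplings (the ±J Edwards-Anderson model, a common form of 3D spin-glass benchmark). Each p-bit stores only six neighbor indices and six weights, so computing every p-bit's input takes O(6N) work instead of the O(N²) a dense matrix would need. The function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: a 3D +/-J spin glass stored as fixed-degree neighbor lists.
def cubic_spin_glass(L, seed=0):
    """Periodic L x L x L lattice with random +/-1 couplings.

    Returns (nbrs, w), both of shape (L**3, 6): row i lists p-bit i's
    neighbors in the order +x, -x, +y, -y, +z, -z and the matching weights.
    """
    rng = np.random.default_rng(seed)
    idx = np.arange(L ** 3).reshape(L, L, L)
    fwd = rng.choice([-1.0, 1.0], size=(3, L, L, L))  # bond from site to site + e_a
    nbr_cols, w_cols = [], []
    for a in range(3):
        nbr_cols.append(np.roll(idx, -1, axis=a).ravel())    # +1 neighbor
        w_cols.append(fwd[a].ravel())
        nbr_cols.append(np.roll(idx, 1, axis=a).ravel())     # -1 neighbor
        w_cols.append(np.roll(fwd[a], 1, axis=a).ravel())    # same bond, other end
    return np.stack(nbr_cols, axis=1), np.stack(w_cols, axis=1)

def local_inputs(m, nbrs, w):
    """Weighted input sum for every p-bit, touching only 6 neighbors each."""
    return (w * m[nbrs]).sum(axis=1)

def energy(m, nbrs, w):
    # Each bond appears in two rows (once from each end), hence the factor 0.5.
    return -0.5 * m @ local_inputs(m, nbrs, w)

nbrs, w = cubic_spin_glass(L=8)
m = np.random.default_rng(1).choice([-1.0, 1.0], size=len(nbrs))
print(nbrs.shape, energy(m, nbrs, w))
```

Doubling the number of p-bits doubles the work per sweep rather than quadrupling it. Because a cubic lattice with even L is bipartite, two colors are enough to update half the p-bits at once.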

5. Beating Quantum Annealers on Spin Glass Problems

5.1 Results on 3D Spin Glasses

In a 2025 study, researchers from UC Santa Barbara showed that p-computers can match and surpass leading quantum annealers on difficult 3D spin-glass optimization problems. These problems are often used as benchmarks for quantum advantage claims.

By tightly co-designing algorithms and hardware, they ran:

- Discrete-time simulated quantum annealing on p-computer hardware.

- Adaptive parallel tempering strategies that exploit many p-bits in parallel.

The result: classical p-computers not only remained competitive but outperformed specialized quantum machines on key metrics such as time to solution and sampling quality.
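The text does not describe the adaptive parallel tempering used in the study, so what follows is only a sketch of plain, non-adaptive parallel tempering (also called replica exchange). Several copies of the network run p-bit sweeps at different inverse temperatures. Neighboring copies occasionally swap states, with acceptance probability min(1, exp((β_k − β_{k+1})(E_k − E_{k+1}))), so that good configurations found at high temperature can migrate to low temperature. The temperature ladder, problem size and function names are all assumptions.

```python
import numpy as np

# Hypothetical helpers: plain replica-exchange sketch, not the study's adaptive method.
def energy(m, J, h):
    return -0.5 * m @ J @ m - h @ m

def pbit_sweep(m, J, h, beta, rng):
    """One sequential p-bit (Gibbs) sweep at inverse temperature beta, in place."""
    for i in range(len(m)):
        I = beta * (J[i] @ m + h[i])
        m[i] = 1.0 if np.tanh(I) > rng.uniform(-1.0, 1.0) else -1.0

def parallel_tempering(J, h, betas, n_rounds=500, seed=0):
    """Return the lowest-energy state seen across all replicas."""
    rng = np.random.default_rng(seed)
    reps = [rng.choice([-1.0, 1.0], size=len(h)) for _ in betas]
    best_m, best_e = reps[0].copy(), energy(reps[0], J, h)
    for _ in range(n_rounds):
        for m, beta in zip(reps, betas):       # slot k always runs at betas[k]
            pbit_sweep(m, J, h, beta, rng)
        for k in range(len(betas) - 1):        # propose swaps between neighbors
            e_k, e_next = energy(reps[k], J, h), energy(reps[k + 1], J, h)
            log_acc = (betas[k] - betas[k + 1]) * (e_k - e_next)
            if log_acc >= 0 or rng.random() < np.exp(log_acc):
                reps[k], reps[k + 1] = reps[k + 1], reps[k]
        for m in reps:
            e = energy(m, J, h)
            if e < best_e:
                best_m, best_e = m.copy(), e
    return best_m, best_e

# Example: 10 p-bits with random all-to-all +/-1 couplings.
rng = np.random.default_rng(5)
n = 10
J = np.triu(rng.choice([-1.0, 1.0], size=(n, n)), 1)
J = J + J.T
h = np.zeros(n)
m, e = parallel_tempering(J, h, betas=[0.1, 0.3, 0.6, 1.0, 2.0])
print("lowest energy found:", e)
```

Each replica's sweep is independent of the others, so on hardware with enough p-bits all replicas can run side by side. This is one reason tempering-style methods suit large p-computers.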

5.2 Scaling to Millions of P-Bits

Using realistic device and interconnect models, the same team simulated p-computer chips with around 3 million p-bits and found that:

- Such chips could be fabricated using existing industrial processes from foundries like TSMC.

- Algorithmic performance scales extremely well with the number of p-bits, thanks to the sparse Ising design.

- Energy per useful sample drops as the system grows, because parallelism is exploited more efficiently.

In other words, this is not a far-future fantasy. It is a credible roadmap for near-term specialized accelerators.

6. Key Technical Applications

6.1 Invertible Logic and P-Circuits

P-computers enable a powerful concept called invertible logic, implemented using probabilistic circuits, or p-circuits.

A single p-circuit can operate in three modes:

- Direct mode: the inputs are clamped and the output converges to the correct result.

- Inverted mode: the output is clamped and the circuit fluctuates among all input combinations consistent with that output. This effectively performs hardware-level constraint solving or factoring.

- Floating mode: neither inputs nor outputs are clamped, and the circuit wanders among all valid input-output pairs.

Hardware demonstrations include:

- A 4-bit ripple-carry adder implemented with 48 p-bits.

- A 4-bit multiplier with 46 p-bits, used as a factorizer in inverted mode.

These show that the attractive properties seen in simulations can survive in physical implementations.
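The three modes can be illustrated on the smallest useful example, a single AND gate built from three p-bits (A, B and C = A AND B). The sketch assumes bipolar p-bits (−1 for logic 0, +1 for logic 1) and weights of the kind used in early invertible-logic work. The weights are taken here as an assumption, but they can be checked directly: with these J and h, the four rows of the AND truth table are exactly the minimum-energy states. "Clamping" a p-bit simply means never updating it. The function name `run_gate` is hypothetical.

```python
import numpy as np

# Hypothetical AND-gate p-circuit. Order of p-bits: A, B, C (C = A AND B).
# Bipolar encoding: -1 is logic 0, +1 is logic 1.
J = np.array([[0.0, -1.0, 2.0],
              [-1.0, 0.0, 2.0],
              [2.0, 2.0, 0.0]])
h = np.array([1.0, 1.0, -2.0])

def run_gate(clamp, n_sweeps=10_000, beta=2.0, seed=0):
    """Gibbs-sample the gate; `clamp` maps p-bit index -> fixed value (+1/-1).

    Returns the fraction of sweeps spent in each (A, B, C) logic state.
    """
    rng = np.random.default_rng(seed)
    m = rng.choice([-1.0, 1.0], size=3)
    for i, v in clamp.items():
        m[i] = v                                   # clamped p-bits never update
    free = [i for i in range(3) if i not in clamp]
    counts = {}
    for _ in range(n_sweeps):
        for i in free:
            I = beta * (J[i] @ m + h[i])
            m[i] = 1.0 if np.tanh(I) > rng.uniform(-1.0, 1.0) else -1.0
        state = tuple(int(s > 0) for s in m)       # back to 0/1 for readability
        counts[state] = counts.get(state, 0) + 1
    return {s: c / n_sweeps for s, c in sorted(counts.items())}

print("direct   (A=1, B=0):", run_gate({0: 1.0, 1: -1.0}))
print("inverted (C=1):     ", run_gate({2: 1.0}))
print("inverted (C=0):     ", run_gate({2: -1.0}))
print("floating:           ", run_gate({}))
```

In direct mode C settles at 0. Clamping C = 1 drives A and B to 1, and clamping C = 0 spreads time across the three input pairs that give 0. In floating mode the circuit stays almost entirely on valid truth-table rows. Because an energy barrier separates (1, 1, 1) from the other rows, the time it spends in each row need not be equal over a short run.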

6.2 Combinatorial Optimization

Many real-world problems, from logistics to portfolio construction to chip placement, can be formulated as combinatorial optimization. P-computers are a natural fit here.

Because p-bits can flip in correlated ways, they can probabilistically hop over energy barriers that trap classical simulated annealing. In some studies, p-computer-based approaches have reduced the number of required sampling operations by up to a factor of 1.2 × 10⁸ in certain factorization tasks.

Instead of waiting for a single deterministic solver to grind through the search space. you get a swarm of probabilistic hardware agents exploring it in parallel.
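As a concrete example, take Max-Cut: split a weighted graph's nodes into two groups so that the total weight of edges between the groups is as large as possible. Because cut(m) = Σ_{i<j} w_ij (1 − m_i m_j) / 2, maximizing the cut is the same as minimizing an Ising energy with J_ij = −w_ij and no biases. The sketch below is a minimal software version, assuming a random weighted graph, a linear β schedule and sequential p-bit sweeps. It records the best cut seen. The graph, schedule and function names are hypothetical.

```python
import numpy as np

# Hypothetical helpers: Max-Cut solved by annealing a p-bit network in software.
def cut_value(m, W):
    # Sum of w_ij over edges whose endpoints have opposite signs (each edge once).
    return 0.25 * np.sum(W * (1 - np.outer(m, m)))

def anneal_maxcut(W, betas, seed=0):
    rng = np.random.default_rng(seed)
    J = -W                                   # Max-Cut as an Ising problem, h = 0
    m = rng.choice([-1.0, 1.0], size=len(W))
    best_m, best_cut = m.copy(), cut_value(m, W)
    for beta in betas:                       # slowly raise beta (cool the system)
        for i in range(len(m)):              # one sequential p-bit sweep
            I = beta * (J[i] @ m)
            m[i] = 1.0 if np.tanh(I) > rng.uniform(-1.0, 1.0) else -1.0
        c = cut_value(m, W)
        if c > best_cut:
            best_m, best_cut = m.copy(), c
    return best_m, best_cut

# Random weighted graph: symmetric, zero diagonal, about half the edges present.
rng = np.random.default_rng(42)
n = 12
W = np.triu(rng.integers(0, 2, size=(n, n)) * rng.uniform(0.5, 2.0, size=(n, n)), 1)
W = W + W.T
m, cut = anneal_maxcut(W, betas=np.linspace(0.1, 3.0, 2000))
print(f"best cut found: {cut:.3f}")
```

Early, low-β sweeps let the p-bits wander freely. Later sweeps freeze them into a low-energy configuration, which corresponds to a large cut.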

6.3 Machine Learning and AI Acceleration

Modern AI workloads increasingly rely on probabilistic reasoning, uncertainty estimation and sampling-based inference.

P-computers can act as low-power probabilistic accelerators for:

- Bayesian inference and graphical models.

- Generative models that require efficient sampling.

- Reinforcement learning scenarios with large, stochastic state spaces.

With millions of p-bits on a chip, these tasks could run orders of magnitude faster and more efficiently than on general-purpose CPUs or even GPUs.

7. Energy Efficiency and Practical Advantages

Quantum computers require dilution refrigerators, complex control electronics and precise calibration. All of that translates into high cost and power overhead.

P-computers flip that picture:

- They operate at room temperature.

- They can be implemented with conventional semiconductor technology plus emerging devices like MRAM.

- Sparse connectivity and p-bit multiplexing reduce the number of physical units required for a given logical problem size.

- Unlike many quantum annealers, p-computers do not need large SRAM arrays and complex readout circuits for each weight and bias.

As optimization problems grow larger, quantum systems face increasing noise and control challenges. P-computers, by contrast, can maintain or even improve their energy efficiency by exploiting more parallelism.

8. Future Outlook and Commercial Potential

The trajectory so far is clear:

- Early prototypes used only 8 p-bits.

- FPGA-based systems like pc-COP scale into the thousands.

- Detailed simulations show that million-scale p-computer chips are feasible with current industrial processes.

From a market and ecosystem point of view, p-computers provide a rigorous classical baseline against which any claim of quantum advantage must now be judged. If a specialized quantum machine cannot clearly beat a well-engineered p-computer in speed and energy on a real-world task, the case for deploying that quantum solution becomes weaker.

Looking ahead, if transistor-based emulations are replaced or augmented with dense nanodevices designed specifically as p-bits, the performance gap could widen dramatically. That would position p-computers as a foundational technology for the next generation of optimization, simulation and AI workloads.

9. Frequently Asked Questions About P-Computers

What is a p-computer in simple terms?

A p-computer is a specialized processor built from probabilistic bits that randomly flip between 0 and 1 with a controllable bias. By wiring many of these p-bits together, the system naturally explores many possible solutions to a problem and spends more time in the best ones.

How are p-computers different from quantum computers?

Quantum computers rely on qubits, superposition and entanglement, and usually need cryogenic temperatures. P-computers run at room temperature on classical hardware but still handle uncertainty and sampling very efficiently. In some optimization benchmarks, p-computers already beat state-of-the-art quantum annealers.

Why are p-computers interesting for AI and machine learning?

Many AI tasks are fundamentally probabilistic. Models need to reason about distributions, missing information and multiple plausible futures. P-computers can implement these probabilistic operations directly in hardware, acting as fast and energy-efficient coprocessors for AI systems.

Can p-computers replace general-purpose CPUs?

No. P-computers are domain-specific accelerators. They are not designed to run operating systems or standard applications. Instead, they complement CPUs and GPUs by offloading the hardest optimization and probabilistic inference tasks.

10. Conclusion

P-computers show that you do not always need fragile quantum hardware to achieve breakthroughs in hard optimization and probabilistic computing. By embracing controlled randomness through p-bits, and by using architectures like sparse Ising machines, engineers have opened a practical path to large-scale, room-temperature accelerators that directly challenge quantum supremacy narratives.

As research continues and dedicated p-computer chips reach the market, these probabilistic machines are likely to become an important pillar of future AI, optimization and scientific computing infrastructure.