Now Reading: OpenClaw AI: From Niche Tool to Global Phenomenon, Navigating the Cybersecurity Frontier

-

01

OpenClaw AI: From Niche Tool to Global Phenomenon, Navigating the Cybersecurity Frontier

OpenClaw AI: From Niche Tool to Global Phenomenon, Navigating the Cybersecurity Frontier

The world of artificial intelligence is moving at an unprecedented pace, and few developments exemplify this more than the meteoric rise of OpenClaw AI. As of February 03, 2026, this open-source autonomous AI personal assistant has not only captured immense public attention but also sparked crucial debates surrounding its rapid adoption and inherent cybersecurity implications.

The Genesis: From Clawdbot to OpenClaw’s Ascent

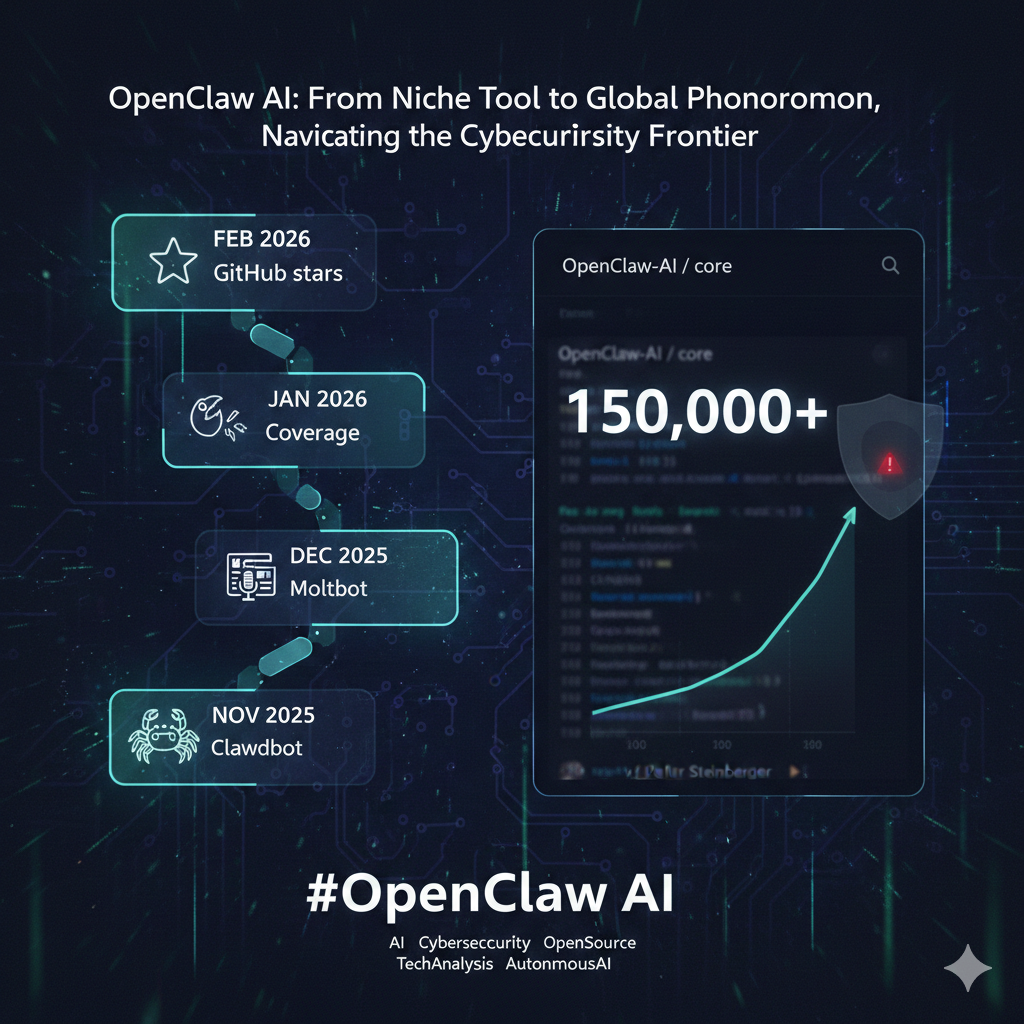

The journey of OpenClaw AI began quietly, but with significant potential. Looking back to November 2025, Austrian researcher Peter Steinberger, known for his work with PSPDFKit, unveiled the initial software. It was then known as “Clawdbot,” marking its public debut as an innovative tool designed for local operation on user devices, capable of integrating with messaging platforms to automate real-world tasks.

This initial release acted as a catalyst, setting in motion a series of rapid developments. By December 2025, the software underwent its first significant change. Due to a trademark request from Anthropic, the company behind the AI assistant Claude, “Clawdbot” was officially renamed “Moltbot.” This rebranding highlighted the evolving landscape of AI intellectual property and the increasing attention such tools were beginning to command.

The momentum continued to build into the new year. Earlier this year, in January 2026, OpenClaw AI began to receive widespread media coverage from major publications. This attention wasn’t just about technical prowess, but showcased its practical applications, moving the discussion beyond developer circles into the broader public consciousness. However, this media spotlight also brought its inherent risks into public discourse, foreshadowing future concerns. (Source: Tier-1 Reporting)

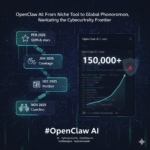

The culmination of this rapid growth arrived swiftly in February 2026. Within just days, OpenClaw AI’s GitHub repository officially surpassed an astonishing 150,000 stars. This milestone serves as a clear indicator of its exponential popularity and robust developer interest. Yet, this very success simultaneously sparked urgent cybersecurity concerns among experts, who began to voice apprehension about the software’s potential access to personal computers and the implications of its autonomous capabilities.

Understanding OpenClaw AI: A New Breed of Autonomous Assistant

So, what exactly *is* OpenClaw AI? At its core, OpenClaw AI is defined as an open-source autonomous AI personal assistant software. Its design allows it to run locally on user devices, offering a degree of privacy and control often sought after in the age of cloud-based AI. The platform’s ability to integrate with various messaging platforms to automate real-world tasks is a key differentiator, making it a powerful tool for productivity and efficiency.

This definition places OpenClaw firmly within the category of autonomous AI agents. These are AI systems designed to perceive their environment, make decisions, and take actions to achieve specific goals, all without requiring constant human intervention. The promise here is immense: an AI that can manage your calendar, draft emails, or even execute complex multi-step tasks simply by understanding your intent from a casual chat.

The Double-Edged Sword: Cybersecurity Risks on the Horizon

The very power that makes autonomous AI agents like OpenClaw so appealing also introduces significant AI cybersecurity risks. Experts are increasingly concerned about the implications of an AI agent having full computer access and the ability to execute commands autonomously. The widespread adoption of OpenClaw, evidenced by its 150,000 GitHub stars, means it is being deployed in diverse environments, significantly increasing its attack surface. This raises the likelihood of discovering novel cybersecurity risks specific to OpenClaw’s architecture or its interaction with other systems.

One of the most prominent fears revolves around prompt injection attacks. Imagine an attacker subtly manipulating the AI’s instructions to perform unintended or malicious actions. With an agent possessing full system access, the consequences could range from data breaches and system compromises to the unintended execution of harmful code. The tension between rapid innovation and the imperative for robust security measures is a defining challenge for OpenClaw AI as it matures.

Moreover, the potential for misuse of OpenClaw AI capabilities cannot be overlooked. The accessibility that led to its widespread media coverage and user adoption also means it could be intentionally or unintentionally leveraged for new security threats, such as generating sophisticated phishing attacks or automating malicious reconnaissance. This isn’t just a theoretical concern; it’s a real risk as these powerful tools become more prevalent.

The Path Forward: Securing the Autonomous Future

The trajectory for OpenClaw AI, marked by its impressive growth and the cybersecurity questions it has raised, suggests a heightened focus on security will be paramount. In the near-term, we anticipate a strong emphasis on addressing these concerns. This will likely involve the release of security patches, vulnerability disclosures, and potentially dedicated security audits for the OpenClaw AI core and its associated agents like Moltbot. Official security advisories from the OpenClaw team and increased community discussions on vulnerabilities will be key indicators.

Looking further out, the mid-term (6-18 months) will likely see OpenClaw AI integrating more robust security features directly into its core architecture. This could involve implementing secure-by-design principles for new iterations of its autonomous AI agents, focusing on measures like sandboxing, granular access control, and verifiable execution. For the broader AI industry, OpenClaw AI’s experience highlights a growing demand for powerful, accessible AI tools, but also a heightened awareness of the associated security liabilities. Stakeholders, from developers to policymakers, must recognize that widespread adoption necessitates a re-evaluation of security protocols for all AI deployments.